This article looks at hardware development as it faces the challenges of rapid technological change, increasing product complexity, and the pressure to deliver faster while maintaining high quality. If you’re struggling with problems discovered so late that they force redesigns and threaten a successful product launch, or with inflexible product development phases, this article offers practical solutions to help you overcome these issues.

Current Challenges in Hardware Development

The hardware development landscape is being transformed by technologies like Artificial Intelligence (AI), Industry 4.0 (IR4), and the Internet of Things (IoT). At the same time, product developers face growing customer expectations and strict sustainability regulations that add complexity to product design and delivery.

As products become more complex and autonomous, traditional sequential design and testing methods fall short. In this constantly changing environment, successful product development increasingly relies on continuous learning and adaptability, making it essential to rethink how we approach hardware development.

Shift in the product development process

To navigate the complexities of modern hardware development, a shift in the development process is essential. Many projects fail because early design decisions become impossible or very costly to adjust within tight timelines. This calls for a new approach – one that focuses on continuous learning and adaptability.

Introducing learning loops and synchronized integration enables teams to stay flexible and adapt to emerging insights. This helps avoid costly mistakes that often come from late problem detection or redesign. Moving away from a “design then test” approach, teams need to adopt a “learn then design” mindset. This shift prioritizes learning to guide and improve the design process.

This article explores key enablers that support this shift, focusing on how they help remove dependencies and promote robust learning loops and integration points. The shift prioritizes modularization, planned learning loops, and value-based slicing. These strategies help accelerate development and maintain adaptability in today’s fast-paced hardware environment.

Modularization

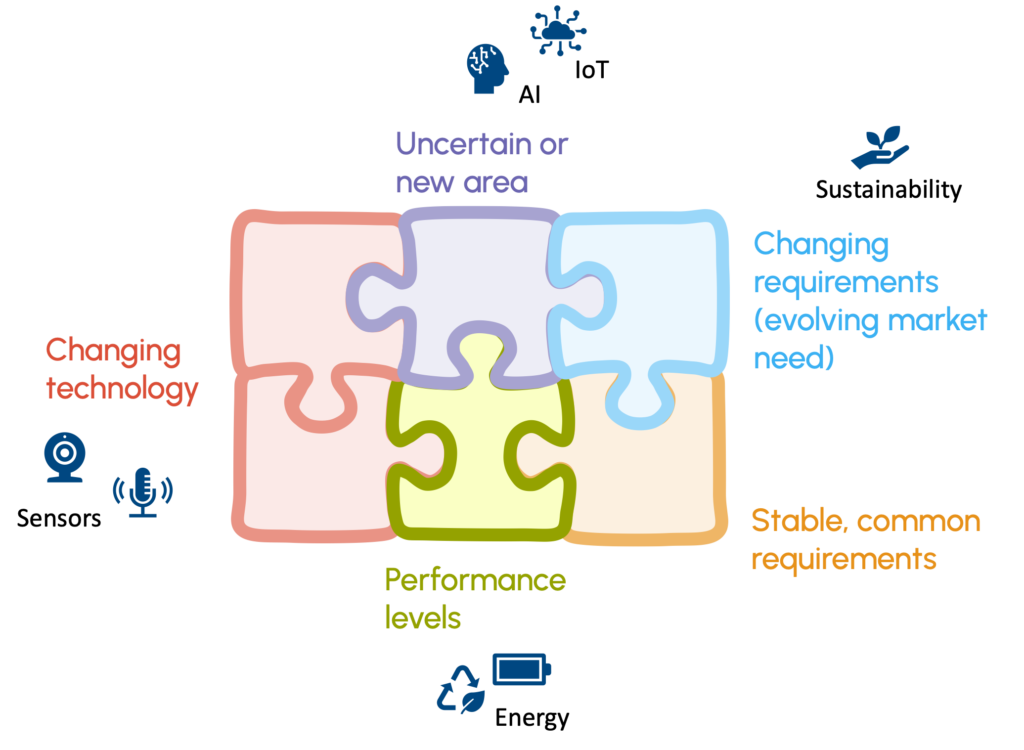

Modularization has long been a powerful strategy in hardware development. It helps reduce complexity and provides economies of scale. It also gives flexibility to customize products for different customers. However, modularization must now evolve to support learning-based development processes.

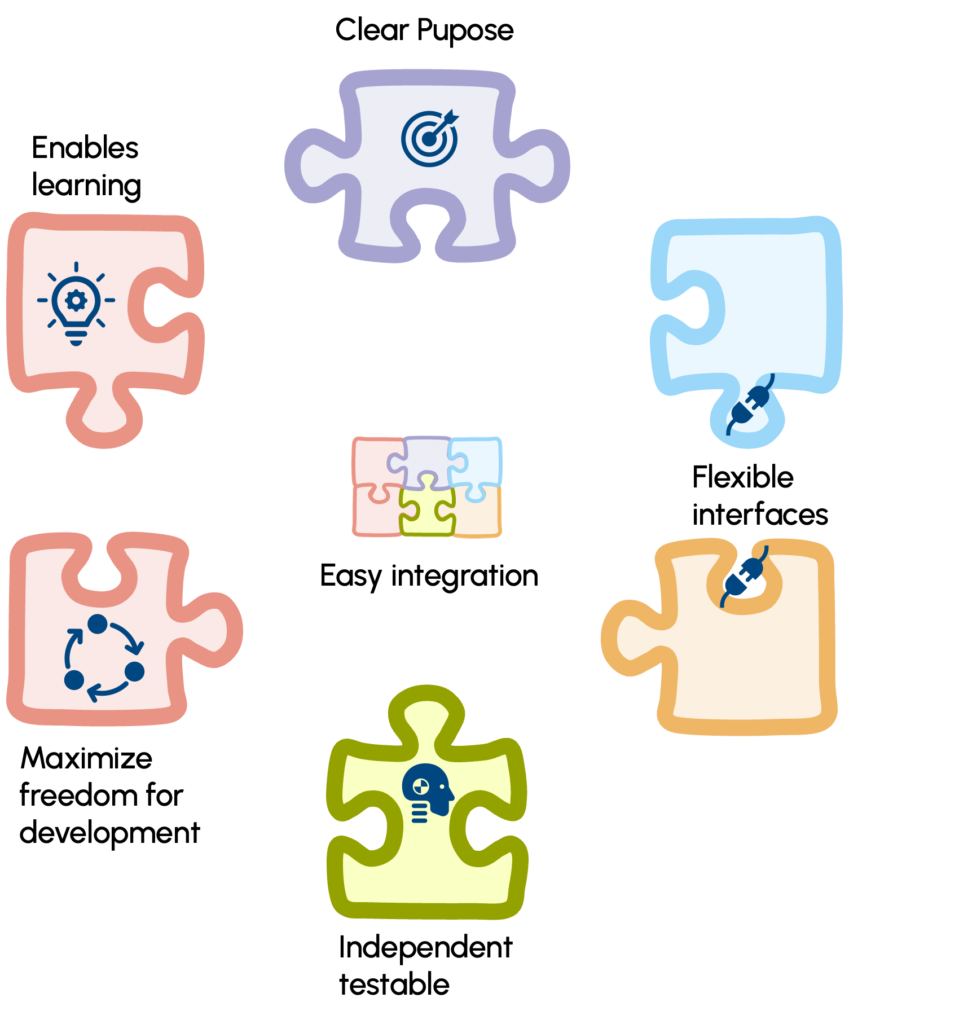

Modules should not just reduce complexity, but also foster adaptability and innovation. When defining modules, several attributes need to be considered:

- Uncertainty: Areas that are unknown and where it is difficult to predict the impact on the product

- Fast evolving: Rapidly changing or evolving technology

- Adaptability: Capability to adjust for changing requirements or performance levels

- Stability: Elements with stable, common requirements that do not require frequent changes.

In this article, we distinguish between components and modules.

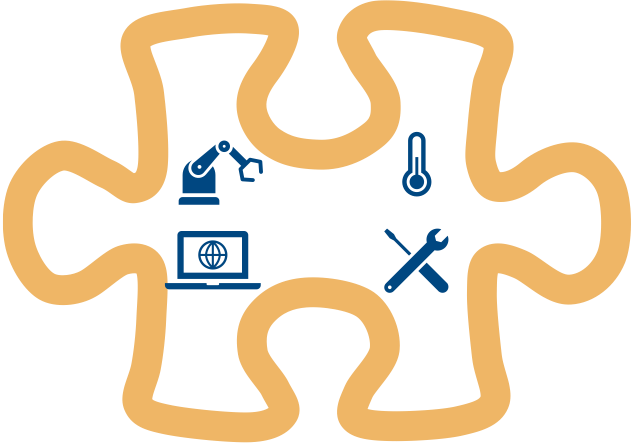

A Component is an individual part or element within a system that contributes to the system’s functionality. Each component is assigned a specific role and interacts with other components to perform its function. For instance, in a hardware system, components include sensors, processors, and actuators, each playing a particular part in achieving the system’s overall objective. Components are essential building blocks but are typically interdependent, requiring close coordination with other parts to function within the larger system.

A Module, on the other hand, is a self-contained unit within a system that encapsulates a specific sub-function or task. Unlike components, modules are designed to be relatively independent, with well-defined, flexible interfaces for interacting with other modules.

This modular structure organizes the system into manageable parts, which facilitates development and modification and enables continuous learning. Modules can be independently developed, upgraded, or replaced without disrupting the entire system, providing flexibility and adaptability. They also allow for customization, as modules can be adjusted to meet specific needs without altering the core system architecture. It is important to note that a module does not represent an overarching design idea or blueprint.

To illustrate how modular design is applied in practice, consider the following examples:

- Battery Management System (BMS): Found in battery-operated devices, this module independently monitors and regulates battery health and charging, interacting with the device via straightforward control lines.

- Sensor Array Module: A self-contained unit for environmental monitoring, integrating various sensors (e.g., temperature, pressure, or proximity). Individual sensors within the module can be replaced or upgraded as needed. The module preprocesses sensor data internally, outputting consolidated, standardized information for easy integration with the central system. This design ensures flexibility, simplifies maintenance, and reduces dependency on central processing.

- Car Seat Module: This module combines the physical seat structure with integrated electronic components, such as motors (for adjustments), heating elements, sensors (e.g., for weight detection or seatbelt alerts), and airbags. It operates autonomously through independent control circuits, while well-defined connectors ensure seamless integration. These connectors enable the module to be easily replaced or upgraded without requiring significant modifications to the vehicle’s overall design.
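To make the Sensor Array Module example more concrete, here is a minimal Python sketch of the idea that only standardized readings cross the module boundary while individual sensors remain replaceable inside it. The names `Sensor`, `FixedSensor`, `Reading`, and `SensorArrayModule`, and the choice to average sensors that measure the same quantity, are illustrative assumptions, not part of any real product or library.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Protocol


class Sensor(Protocol):
    """Hypothetical internal interface that every sensor in the array satisfies."""

    quantity: str  # e.g. "temperature" or "pressure"

    def read(self) -> float: ...


@dataclass
class FixedSensor:
    """Illustrative stand-in for real hardware: always returns the same value."""

    quantity: str
    value: float

    def read(self) -> float:
        return self.value


@dataclass(frozen=True)
class Reading:
    """Standardized record; this is all the central system ever sees."""

    quantity: str
    value: float


class SensorArrayModule:
    """Self-contained module: sensors stay internal, only Readings leave it."""

    def __init__(self, sensors: list[Sensor]) -> None:
        self._sensors = list(sensors)

    def replace_sensor(self, index: int, sensor: Sensor) -> None:
        # Swapping or upgrading a sensor is invisible outside the module.
        self._sensors[index] = sensor

    def readings(self) -> list[Reading]:
        # Internal preprocessing (assumed here): average all sensors per quantity.
        grouped: dict[str, list[float]] = {}
        for sensor in self._sensors:
            grouped.setdefault(sensor.quantity, []).append(sensor.read())
        return [Reading(q, mean(values)) for q, values in sorted(grouped.items())]


if __name__ == "__main__":
    module = SensorArrayModule([
        FixedSensor("temperature", 20.0),
        FixedSensor("temperature", 22.0),
        FixedSensor("pressure", 101.3),
    ])
    print(module.readings())
    module.replace_sensor(0, FixedSensor("temperature", 24.0))  # upgrade one sensor
    print(module.readings())  # same output format, new values
```

The central system depends only on `Reading`, so replacing a sensor changes the values it receives but not the shape of the data, which is the independence the module definition above calls for.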

Modules are part of, and together form, a system architecture. Effective architecture balances many considerations, aiming for loosely coupled modules that align with product attributes, create development freedom, and enable learning and innovation.

General principles for system architecture:

- Prioritize Robustness in Interfaces: Design interfaces to handle potential changes in requirements. For example, provide additional signal capacity in connectors to support future needs.

- Minimize Interdependencies: Strive for low coupling between modules. Modules should communicate only through well-defined interfaces, and their internal workings should be hidden to reduce complexity during integration. A change in one module should not force costly changes in other modules.

- Balance Flexibility and Cost: While flexibility is crucial, avoid overdesigning modules. Weigh the potential benefits of future adaptability against the immediate costs and complexities.

- Support Learning and Innovation: Design the architecture to allow for experimentation with alternative designs. For instance, allow new module variants to be tested without disrupting the existing system.

By following these principles, modularization becomes a key enabler for accelerating hardware development through learning and innovation.
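The principle that a change in one module should not force changes in other modules can be checked early with a simple dependency analysis. The following Python sketch uses a hypothetical car seat architecture (the module names and dependencies are assumptions for illustration) and lists which modules might need rework when one module changes its interface. It takes the worst case, assuming every affected module passes the change on through its own interface.

```python
from collections import deque

# Hypothetical car seat architecture: module -> modules whose interfaces it uses.
DEPENDS_ON: dict[str, set[str]] = {
    "bms": set(),
    "seat_controller": {"bms"},
    "heating": {"seat_controller"},
    "adjustment_motors": {"seat_controller"},
    "sensor_array": set(),
}


def affected_by_interface_change(changed: str, depends_on: dict[str, set[str]]) -> set[str]:
    """Worst-case set of modules that may need rework if `changed` alters its interface.

    Assumes each affected module passes the change on through its own interface,
    so the search follows reverse dependencies transitively (breadth-first).
    """
    used_by: dict[str, set[str]] = {module: set() for module in depends_on}
    for module, deps in depends_on.items():
        for dep in deps:
            used_by.setdefault(dep, set()).add(module)

    affected: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for user in used_by.get(current, set()):
            if user not in affected:
                affected.add(user)
                queue.append(user)
    affected.discard(changed)  # a dependency cycle could lead back to the origin
    return affected


if __name__ == "__main__":
    print(sorted(affected_by_interface_change("bms", DEPENDS_ON)))
    print(sorted(affected_by_interface_change("sensor_array", DEPENDS_ON)))
```

In this hypothetical example, an interface change in `bms` ripples into three other modules, whereas `sensor_array` has no users and can change freely. The fewer modules such a query returns, the looser the coupling.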

Integrate and Iterate

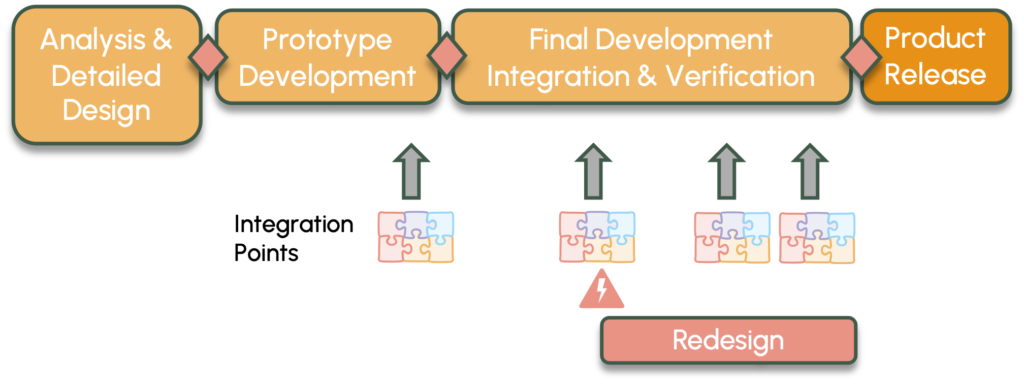

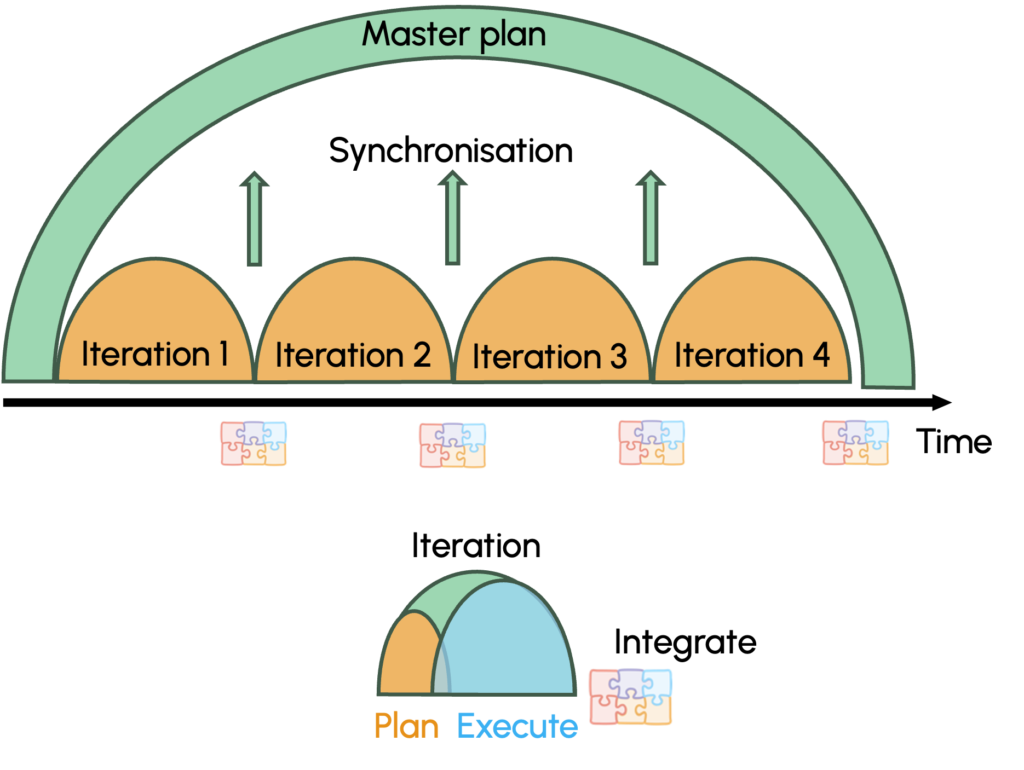

Traditional hardware development processes, such as the V-Model and phase-gate approaches, follow a rigid sequence of phases with fixed targets. These methods help set clear goals and plan around external milestones, but they come with challenges.

Modules are developed separately and brought together at integration points. These integration points test the modules’ interoperability and alignment with system requirements, but they often occur late in the project. This increases the risk of uncovering critical problems too late to fix without delays, shortcuts, or compromises in requirements.

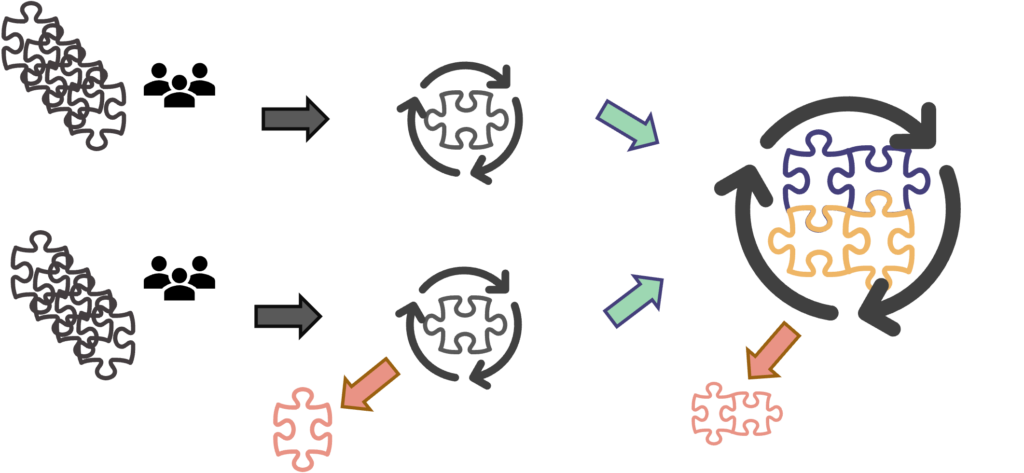

In a learning-based approach, these risks are mitigated through planned learning cycles. These cycles involve repeated planning, evaluation, design iteration, and testing before final approval. This helps eliminate unworkable concepts early and shortens learning cycles with more frequent integration and testing.
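A minimal Python sketch of how repeated learning cycles can eliminate unworkable concepts early: each cycle applies one evaluation, and a concept that fails is dropped before further design effort is spent on it. The concept names, their attributes, and the pass criteria are hypothetical placeholders, not real data.

```python
from typing import Callable

Concept = dict[str, float]          # hypothetical measured attributes of a concept
Check = Callable[[Concept], bool]   # evaluation applied in one learning cycle


def run_learning_cycles(
    concepts: dict[str, Concept], cycles: list[tuple[str, Check]]
) -> dict[str, str]:
    """Apply one check per cycle and drop failing concepts immediately.

    Returns, for every concept, the cycle that eliminated it or "survived".
    """
    outcome: dict[str, str] = {}
    alive = dict(concepts)
    for cycle_name, check in cycles:
        for name, attributes in list(alive.items()):
            if not check(attributes):
                outcome[name] = f"dropped in {cycle_name}"
                del alive[name]  # no further design effort spent on it
    for name in alive:
        outcome[name] = "survived"
    return outcome


if __name__ == "__main__":
    # Hypothetical cooling concepts and pass criteria, for illustration only.
    concepts = {
        "passive_heatsink": {"max_temp_c": 92.0, "unit_cost_eur": 4.0},
        "fan": {"max_temp_c": 70.0, "unit_cost_eur": 9.0},
        "liquid_cooling": {"max_temp_c": 55.0, "unit_cost_eur": 30.0},
    }
    cycles = [
        ("thermal bench test", lambda c: c["max_temp_c"] <= 85.0),
        ("cost review", lambda c: c["unit_cost_eur"] <= 12.0),
    ]
    for name, result in run_learning_cycles(concepts, cycles).items():
        print(f"{name}: {result}")
```

Placing cheap, decisive checks in the earliest cycles means fewer concepts reach the expensive later stages.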

These learning cycles are embedded in rolling wave planning. This method combines a long-term master plan with short-term iteration cycles. The master plan provides the long-term view, using backward planning to set key deadlines, while iteration plans focus on learning cycles and short-term adjustments based on emerging challenges. Adjustments to the master plan are based on fact-based learning and keep it a realistic, transparent, and up-to-date view of the project.

This approach allows teams to learn and adapt quickly, synchronizing short-term goals with the overall project timeline.
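The backward planning used for the master plan can be sketched in a few lines of Python: starting from a fixed deadline, each milestone's latest start date is derived by subtracting its estimated duration. The milestone names, durations, and deadline below are hypothetical. In a rolling wave setup, the durations would be updated with what each iteration has learned and the plan recomputed.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Milestone:
    name: str
    duration_days: int  # current estimate; refined as iterations produce facts


def backward_plan(deadline: date, milestones: list[Milestone]) -> list[tuple[str, date, date]]:
    """Place milestones back to back so that the last one ends on `deadline`.

    Returns (name, latest_start, due) tuples in chronological order.
    """
    schedule: list[tuple[str, date, date]] = []
    due = deadline
    for milestone in reversed(milestones):
        start = due - timedelta(days=milestone.duration_days)
        schedule.append((milestone.name, start, due))
        due = start  # the previous milestone must finish when this one starts
    schedule.reverse()
    return schedule


if __name__ == "__main__":
    # Hypothetical milestones and deadline, for illustration only.
    plan = backward_plan(
        date(2025, 6, 30),
        [Milestone("Concept", 30), Milestone("Prototype", 60), Milestone("Validation", 45)],
    )
    for name, start, due in plan:
        print(f"{name}: start by {start}, due {due}")
```

With these inputs, the Concept phase must start by 2025-02-15 for validation to finish on 2025-06-30. If an iteration shows that the prototype needs 75 days instead of 60, re-running the plan moves that start date to 2025-01-31, which makes the consequence of the new fact visible immediately.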

Value-based work packages

Work packages are structured, manageable units of product development, designed to add value at each step toward the final product. Value can take various forms, such as enhanced functionality, improved performance, or higher quality. These work packages are organized at different levels. At the highest level, work packages might represent large phases of development, such as creating a prototype or completing a validation cycle. At intermediate levels, they could correspond to modules or subsystems, like developing a battery management system or a sensor array. Together, the contributions of each work package build towards delivering measurable value in the finished system.

Smaller work packages offer several advantages:

- Early and Frequent Learning: Iterating over small work packages provides faster feedback and learning cycles.

- Customer and Market Value: Addressing customer needs in small increments allows teams to deliver market-ready solutions sooner.

- Efficiency: Smaller packages reduce development time, lower costs, and improve product quality due to frequent integration.

- Risk Mitigation: Iterating over smaller packages reduces both product and process risks, because issues are caught sooner and can therefore be addressed early.

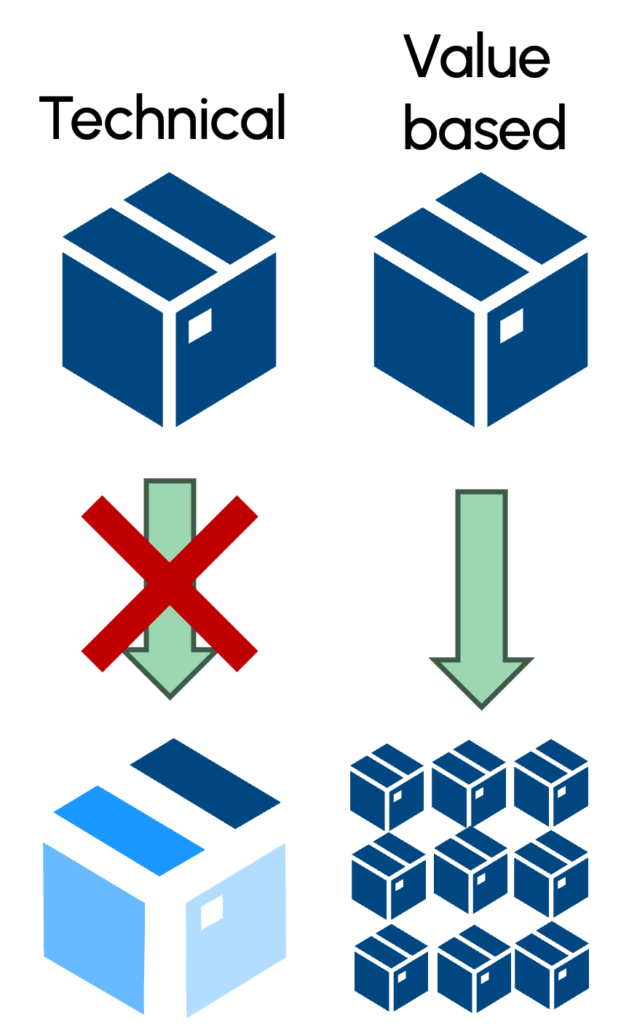

The process of breaking down large work into smaller, meaningful chunks is called slicing. While simple in theory, it can be challenging for complex tasks. Slicing can be approached from two perspectives: technical slicing and value-based slicing.

Technical slicing divides work based on the system’s architecture or functionality, such as isolating tasks for specific subsystems like electronics, software, or mechanics. This approach, while organized, often leads to interdependent tasks that cannot deliver standalone value until fully integrated.

On the other hand, value-based slicing focuses on creating smaller pieces of work that independently deliver value, whether through functionality, performance, quality, or usability. For instance, instead of separately developing hardware and software components, a value-based slice might deliver an integrated prototype of a specific feature, providing immediate feedback and accelerating learning.

Effective slicing combines technical feasibility with meaningful value delivery, ensuring every piece contributes to the end product in a way that supports user needs and project learning objectives.

Horizontal vs. Vertical Slicing

The concepts of technical and value-based slicing can be visualized through horizontal and vertical slicing. A helpful analogy is slicing bread – each slice should still be functional enough to make a sandwich. Similarly, every work package should meaningfully contribute to the system’s overall purpose, whether through progress, learning, or delivering value.

Horizontal Slicing divides work along module or subsystem boundaries, such as separating tasks for hardware, software, or mechanics. While it helps organize complex products into manageable parts, the drawback is that standalone value is often not realized until all pieces are integrated. This approach can delay the discovery of integration challenges or design issues until later in the process.

Vertical slicing, by contrast, cuts through multiple system layers to deliver tasks or prototypes that provide functionality, usability, or learning in a complete form. For example, instead of developing separate subsystems for sensors and control logic, a vertical slice might deliver a working sensor module integrated with basic software to demonstrate its functionality. This approach accelerates learning, enables more frequent evaluations, and delivers value earlier in the development process.
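One way to make the difference between horizontal and vertical slices tangible is to model a work package as a set of (layer, feature) tasks and ask which features the package can demonstrate on its own, meaning that every layer for that feature is included. This Python sketch assumes three layers (mechanics, electronics, software) and hypothetical sensor features; both are illustrative choices.

```python
# Hypothetical layers that a sensor feature has to pass through.
LAYERS = ("mechanics", "electronics", "software")

Task = tuple[str, str]  # (layer, feature)


def demonstrable_features(package: set[Task]) -> set[str]:
    """Features the package can demonstrate on its own: all layers are covered."""
    features = {feature for _, feature in package}
    return {f for f in features if all((layer, f) in package for layer in LAYERS)}


# Horizontal slice: one layer (electronics) for several features.
horizontal = {
    ("electronics", "temperature"),
    ("electronics", "pressure"),
    ("electronics", "proximity"),
}

# Vertical slice: every layer, but only for the temperature feature.
vertical = {
    ("mechanics", "temperature"),
    ("electronics", "temperature"),
    ("software", "temperature"),
}

print(demonstrable_features(horizontal))  # set(): nothing testable until integration
print(demonstrable_features(vertical))    # {'temperature'}: can be evaluated now
```

Both packages contain three tasks, but only the vertical one yields something that can be evaluated before the remaining work is integrated.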

Conclusion

All three elements of the learning-based approach – modularization, planned learning cycles, and value-based work packages – play a vital role in accelerating timelines, reducing risks, and increasing flexibility in hardware development.

Modularization and a well-designed module architecture provide the freedom to develop and innovate different system parts independently. This flexibility allows teams to progress without being slowed by dependencies. Planned learning cycles, in turn, enable frequent integration of the modules and continuous learning. These mechanisms form the foundation of a more adaptive process. Their full potential is realized when work packages are designed with a focus on learning and business value, which allows frequent evaluations, iterative learning, and early releases.

If you’re curious about exploring a learning-based approach to hardware development or applying it in your area, please don’t hesitate to contact me.

Appendix

Modules at Every Level: A Scalable Concept

The principles around modules described in this article apply not only to large-scale elements such as vehicle modules (e.g., car seats or powertrain assemblies). The modular concept is scalable and equally relevant at all levels of a system. For example, within a vehicle module like a car seat, smaller sub-modules may exist, such as the heating system, motorized adjustment mechanisms, or embedded sensors. These sub-modules further break down into even smaller modules, such as individual circuits, components, or even integrated chips.

This scalability can be compared to the human body: the body functions as a system of modules, where each organ (like the heart or lungs) serves a distinct function. Each organ itself comprises smaller modules, down to the cellular level, where individual cells operate as self-contained units contributing to the larger system. Similarly, a modular architecture in a cyber-physical system can be envisioned as a hierarchy where every level, from the largest modules down to their smallest constituent parts, adheres to the same principles of modular design, independence, and well-defined interfaces.

This multi-level application of modularity ensures flexibility, maintainability, and scalability in product development, allowing for efficient upgrades, replacements, or refinements at any level of the system.
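The recursive nature of this hierarchy maps naturally onto a tree in which every node, whatever its level, is the same kind of object. The following Python sketch uses hypothetical module and signal names loosely based on the car seat example and applies one design rule (every module declares an interface) uniformly at every level.

```python
from collections.abc import Iterator
from dataclasses import dataclass, field


@dataclass
class Module:
    """A module at any level: it declares an interface and may contain sub-modules."""

    name: str
    interface: tuple[str, ...]  # hypothetical: signals exposed to the parent level
    children: list["Module"] = field(default_factory=list)

    def walk(self, depth: int = 0) -> Iterator[tuple[int, "Module"]]:
        """Yield (depth, module) pairs top-down through the whole hierarchy."""
        yield depth, self
        for child in self.children:
            yield from child.walk(depth + 1)


def modules_without_interface(root: Module) -> list[str]:
    """Apply the same design rule at every level: each module declares an interface."""
    return [module.name for _, module in root.walk() if not module.interface]


# Hypothetical breakdown of the car seat example into sub-modules.
seat = Module("car_seat", ("power", "can_bus"), [
    Module("heating", ("heat_request",), [Module("heater_driver_circuit", ("pwm_in",))]),
    Module("adjustment", ("position_cmd",), [Module("motor_driver", ())]),
    Module("occupancy_sensor", ("occupied",)),
])

for depth, module in seat.walk():
    print("  " * depth + module.name)
print(modules_without_interface(seat))  # ['motor_driver']
```

Because each level is the same type, checks such as this one run unchanged from the top-level module down to the smallest sub-module.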

Background of the author

Dirk Holste is an experienced transformation coach with extensive practical experience in guiding the creation of valuable organisational capabilities. He is an experienced trainer, mentor and guide for those leading or participating in change work.